A data-based large-scale model for primary visual cortex enables brain-like robust and versatile visual processing

Abstract

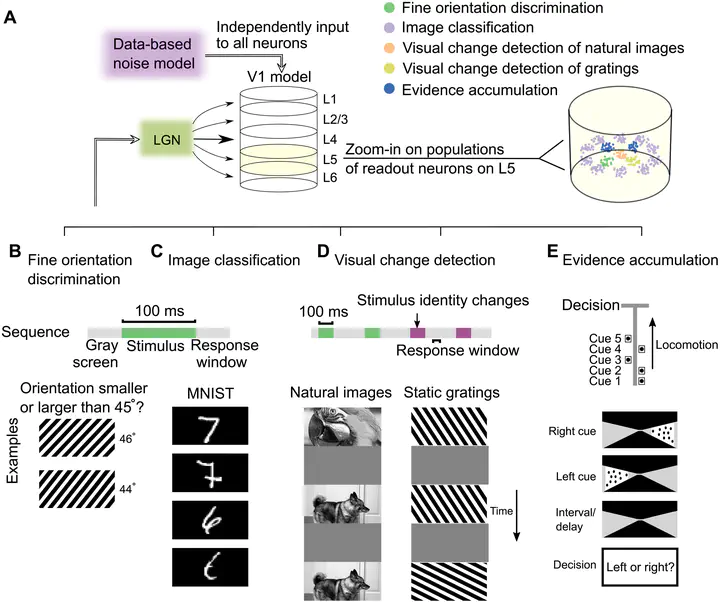

We analyze the visual processing capabilities of a large-scale model of area V1 that arguably integrates the most comprehensive collection of anatomical and neurophysiological data to date. We find that this brain-like neural network model reproduces a number of characteristic visual processing capabilities of the brain. In particular, it can solve diverse visual processing tasks, including tasks that require integrating temporally dispersed visual information, with remarkable robustness to noise. The architecture and neurons of this V1 model differ markedly from those of the deep neural networks used in current artificial intelligence (AI), such as convolutional neural networks (CNNs). The model also reproduces a number of characteristic neural coding properties of the brain, which help explain its superior noise robustness. Because visual processing in the brain is substantially more energy efficient than in CNNs, such brain-like neural networks are likely to influence future technology, in particular as blueprints for visual processing in more energy-efficient neuromorphic hardware.