Biography

I am currently a research scientist at Huawei Technologies Switzerland, where I develop machine learning algorithms and investigate which principles of brain computation and cognition hold promise for advancing artificial intelligence.

Previously, I completed my PhD with Prof. Wolfgang Maass at the Institute of Theoretical Computer Science at TU Graz, Austria, where I studied learning processes from a neuroscientific perspective and designed models of them.

You can reach out to me at: me [at] franzscherr [dot] com.

Interests

- Deep Learning

- Neuroscience

- Artificial Intelligence

Education

- PhD in Computer Science, 2020, Graz University of Technology

- MSc in Computer Engineering, 2018, Graz University of Technology

- BSc in Physics, 2018, Graz University of Technology

- BSc in Computer Engineering, 2016, Graz University of Technology

Featured Publications

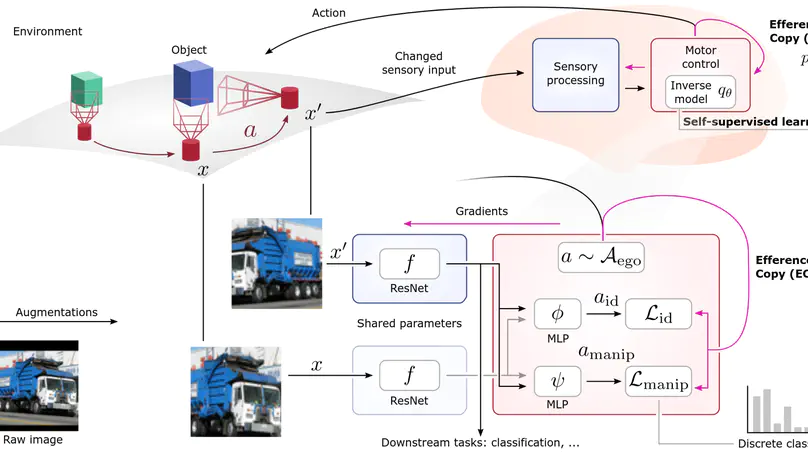

Self-supervised learning (SSL) methods aim to exploit the abundance of unlabelled data for machine learning (ML); however, their underlying principles are often method-specific. An SSL framework derived from biological first principles of embodied learning could unify the various SSL methods, help elucidate learning in the brain, and possibly improve ML. SSL commonly transforms each training datapoint into a pair of views, uses the knowledge of this pairing as a positive (i.e., non-contrastive) self-supervisory sign, and potentially opposes it to unrelated (i.e., contrastive) negative examples. Here, we show that this type of self-supervision is an incomplete implementation of a concept from neuroscience, the Efference Copy (EC). Specifically, the brain also transforms the environment through efference, i.e., motor commands; however, it sends itself an EC of the full commands, i.e., more than a mere SSL sign. In addition, its action representations are likely egocentric. From this principled foundation, we formally recover and extend SSL methods such as SimCLR, BYOL, and ReLIC within a common theoretical framework, Self-supervision Through Efference Copies (S-TEC). Empirically, S-TEC meaningfully restructures the within- and between-class representations. This manifests as improvements over recent strong SSL baselines in image classification, segmentation, object detection, and audio. These results suggest a testable hypothesis: a positive influence of the brain's motor outputs on its sensory representations.

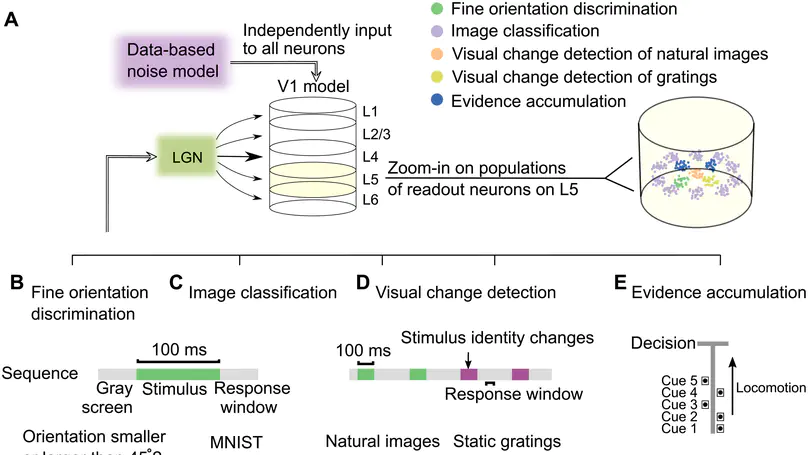

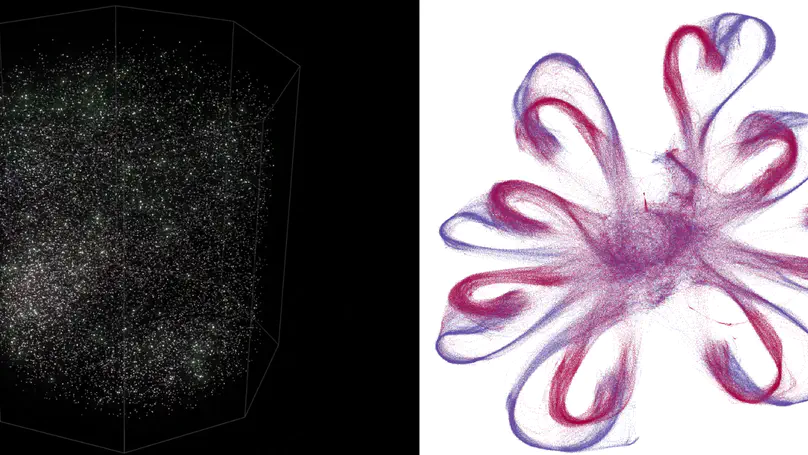

We analyze the visual processing capabilities of a large-scale model of area V1 that arguably provides the most comprehensive accumulation of anatomical and neurophysiological data to date. We find that this brain-like neural network model can reproduce a number of characteristic visual processing capabilities of the brain, in particular the capability to solve diverse visual processing tasks, including tasks on temporally dispersed visual information, with remarkable robustness to noise. This V1 model, whose architecture and neurons differ markedly from those of the deep neural networks used in current artificial intelligence (AI), such as convolutional neural networks (CNNs), also reproduces a number of characteristic neural coding properties of the brain, which help explain its superior noise robustness. Because visual processing is substantially more energy-efficient in the brain than in CNNs, such brain-like neural networks are likely to influence future technology as blueprints for visual processing in more energy-efficient neuromorphic hardware.

The neocortex can be viewed as a tapestry of variations on rather stereotypical local cortical microcircuits. Hence, understanding how these microcircuits compute holds the key to understanding brain function. Intense research efforts over several decades have culminated in a detailed model, developed at the Allen Institute, of a generic cortical microcircuit in the primary visual cortex. Here we present methods and first results for understanding the computational properties of this large-scale, data-based model. We show that it can solve a standard image-change-detection task almost as well as the living brain. Furthermore, we unravel the computational strategy of the model and elucidate the computational role of diverse neuron subtypes. Altogether, this work demonstrates the feasibility and scientific potential of a methodology that closely combines detailed data with large-scale computer modelling to understand brain function.

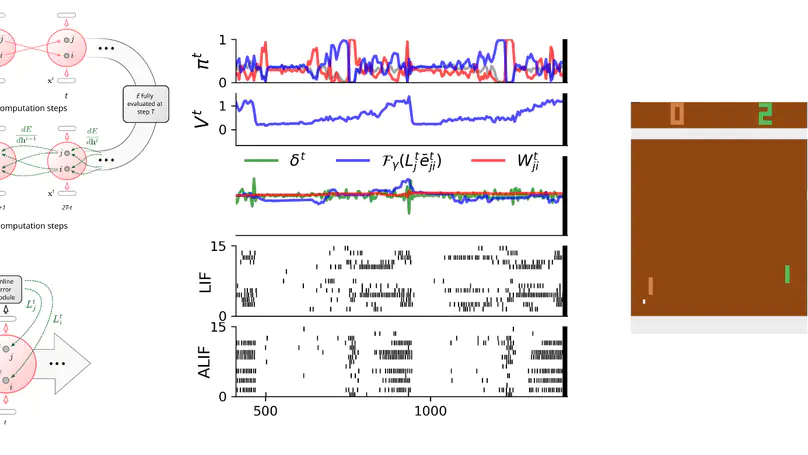

Recurrently connected networks of spiking neurons underlie the astounding information processing capabilities of the brain. Yet, despite extensive research, it remains unclear how they can learn through synaptic plasticity to carry out complex network computations. We argue that experimental data from neuroscience provide two pieces of this puzzle. A mathematical result tells us how these pieces need to be combined to enable biologically plausible online network learning through gradient descent, in particular deep reinforcement learning. This learning method, called e-prop, approaches the performance of backpropagation through time (BPTT), the best-known method for training recurrent neural networks in machine learning. In addition, it suggests a method for powerful on-chip learning in energy-efficient spike-based hardware for artificial intelligence.
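The core of e-prop can be condensed into a single gradient factorization. What follows is a simplified restatement of the published formulation, not a complete derivation: the notation (loss $E$, weight $W_{ji}$ from neuron $i$ to neuron $j$, spike output $z_j^t$, hidden state $\mathbf{h}_j^t$, learning signal $L_j^t$, eligibility vector $\boldsymbol{\epsilon}_{ji}^t$, eligibility trace $e_{ji}^t$) is introduced here for illustration, and neuron-model-specific details such as additional filtering of the traces are omitted.

```latex
\frac{dE}{dW_{ji}}
  = \sum_t \frac{dE}{dz_j^t}\, e_{ji}^t
  \;\approx\; \sum_t L_j^t\, e_{ji}^t,
\qquad
L_j^t = \frac{\partial E}{\partial z_j^t}

e_{ji}^t = \frac{\partial z_j^t}{\partial \mathbf{h}_j^t} \cdot \boldsymbol{\epsilon}_{ji}^t,
\qquad
\boldsymbol{\epsilon}_{ji}^t
  = \frac{\partial \mathbf{h}_j^t}{\partial \mathbf{h}_j^{t-1}} \cdot \boldsymbol{\epsilon}_{ji}^{t-1}
  + \frac{\partial \mathbf{h}_j^t}{\partial W_{ji}}
```

The first equality is exact; the approximation replaces the total derivative $dE/dz_j^t$, which depends on future network activity, with the partial derivative $L_j^t$, which is available at time $t$. Because $\boldsymbol{\epsilon}_{ji}^t$ is updated forward in time from quantities local to the synapse, the gradient can be accumulated online without propagating errors backward through time.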